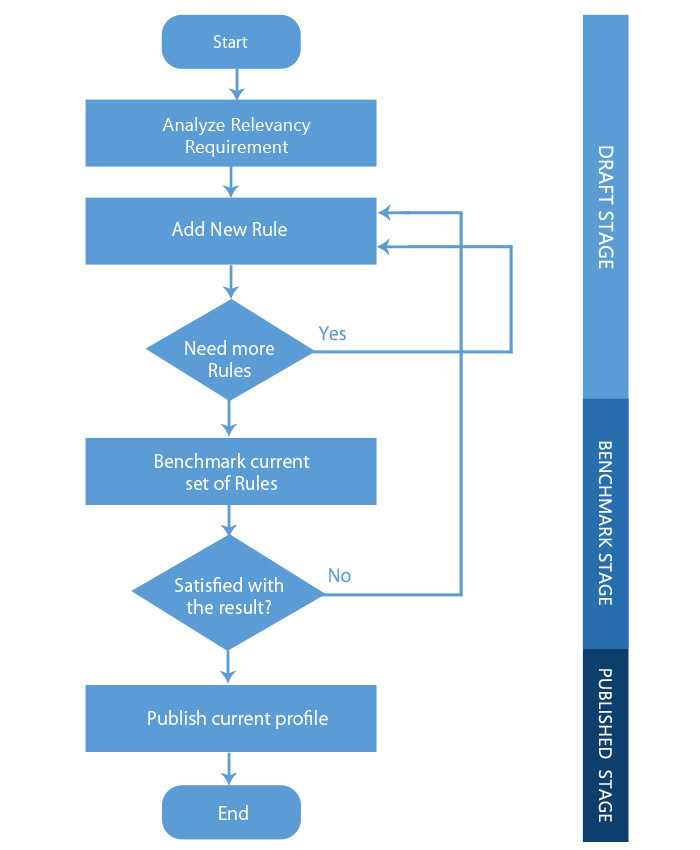

Relevance Workflow

To formalize the process of creating relevancy rules, every Relevancy Model goes through a fixed workflow. The workflow helps the user build a better set of rules, because the rules are tested against real search queries before they are deployed. It consists of three stages: Draft, Benchmark, and Publish.
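The three-stage workflow in this section (Draft, Benchmark, Publish) behaves like a small forward-only state machine. The sketch below models it under stated assumptions: the class and method names (`Stage`, `RelevancyModel`, `advance`, `can_affect_search`) are hypothetical, rules are represented as plain strings, and a model can only move forward one stage at a time. It is an illustration of the lifecycle, not the product's actual implementation.

```python
from enum import Enum
from typing import List


class Stage(Enum):
    DRAFT = "draft"
    BENCHMARK = "benchmark"
    PUBLISH = "publish"


# Assumed forward-only transitions: Draft -> Benchmark -> Publish.
NEXT_STAGE = {
    Stage.DRAFT: Stage.BENCHMARK,
    Stage.BENCHMARK: Stage.PUBLISH,
}


class RelevancyModel:
    def __init__(self, name: str) -> None:
        self.name = name
        self.rules: List[str] = []  # hypothetical: rules stored as plain strings
        self.stage = Stage.DRAFT    # every model starts as a draft

    def advance(self) -> None:
        """Move the model to the next stage (benchmark, then publish)."""
        if self.stage not in NEXT_STAGE:
            raise ValueError(f"{self.name} is already published")
        self.stage = NEXT_STAGE[self.stage]

    def can_affect_search(self) -> bool:
        # Draft and benchmarked models never take part in search queries.
        return self.stage is Stage.PUBLISH
```

A fresh `RelevancyModel("products")` starts in `Stage.DRAFT`; calling `advance()` twice publishes it, and only then does `can_affect_search()` return `True`.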

Stage 1: Draft

Every Relevancy Model starts in the Draft stage. Here the user is free to add, remove, and modify rules as they see fit. A draft model plays no part in defining the system's relevancy and is not applied to any search query. Once a draft model is benchmarked, it moves to the Benchmark stage.

Stage 2: Benchmark

A benchmarked Relevancy Model is one whose rules have been tested against a set of search queries. The test produces a set of metrics that indicate how this model compares with other Relevancy Models. The user can still add, remove, and modify rules as required. A benchmarked model still plays no part in defining the system's relevancy and is not applied to any search query. To use the model's rules in search queries, the user must publish it.
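The section does not say which metrics a benchmark produces, so the following is only a sketch of the idea using mean precision@k, a common ranking metric chosen here as an assumption. Each model is treated as a hypothetical function that maps a query to a ranked list of document ids, and the benchmark set maps each query to the ids judged relevant for it. The names `benchmark` and `rank_models` are illustrative, not part of any documented API.

```python
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical model interface: query string -> ranked document ids.
Ranker = Callable[[str], List[str]]


def precision_at_k(ranked: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top-k slots filled by relevant documents."""
    hits = sum(1 for doc in ranked[:k] if doc in relevant)
    return hits / k


def benchmark(model: Ranker, queries: Dict[str, Set[str]], k: int = 3) -> float:
    """Mean precision@k of one model over a fixed benchmark query set."""
    if not queries:
        raise ValueError("benchmark query set is empty")
    scores = [precision_at_k(model(q), relevant, k) for q, relevant in queries.items()]
    return sum(scores) / len(scores)


def rank_models(models: Dict[str, Ranker], queries: Dict[str, Set[str]],
                k: int = 3) -> List[Tuple[str, float]]:
    """Benchmark every model on the same queries, best score first."""
    results = [(name, benchmark(m, queries, k)) for name, m in models.items()]
    return sorted(results, key=lambda item: item[1], reverse=True)
```

Running every model against the same query set is what makes the scores comparable, which is the point of the Benchmark stage: the numbers tell the user where one rule set stands relative to the others before anything is published.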

Stage 3: Publish

A published Relevancy Model has been promoted to be part of the user's search process, and its rules are applied to search queries by default. There can be multiple published models, at both global and local scope. At any given time, however, there can be at most two default models: one global default and one local default. If the user has no local default model, the rules from the global default model are applied to all of that user's queries.
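The default-model rule can be expressed as a simple lookup with a fallback. This sketch rests on two assumptions that the text implies but does not spell out: "local" scope means one default per user, and a user's local default takes precedence over the global default when both exist. `PublishedModel` and `DefaultModels` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class PublishedModel:
    name: str
    rules: List[str] = field(default_factory=list)  # hypothetical rule format


class DefaultModels:
    def __init__(self) -> None:
        self.global_default: Optional[PublishedModel] = None
        # Assumption: local scope is per user, keyed by a user id.
        self.local_defaults: Dict[str, PublishedModel] = {}

    def set_global_default(self, model: PublishedModel) -> None:
        # Assigning replaces any previous global default, so there is only ever one.
        self.global_default = model

    def set_local_default(self, user: str, model: PublishedModel) -> None:
        # Likewise, each user holds at most one local default.
        self.local_defaults[user] = model

    def resolve(self, user: str) -> Optional[PublishedModel]:
        # Assumed precedence: local default first, else fall back to the global default.
        return self.local_defaults.get(user, self.global_default)
```

Storing each default in a single slot (one attribute for global, one dictionary entry per user for local) enforces the "at most one of each" constraint structurally, rather than by validating after the fact.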