Benchmarking

Tuning the System to Meet Your Search Requirements

What is Benchmarking?

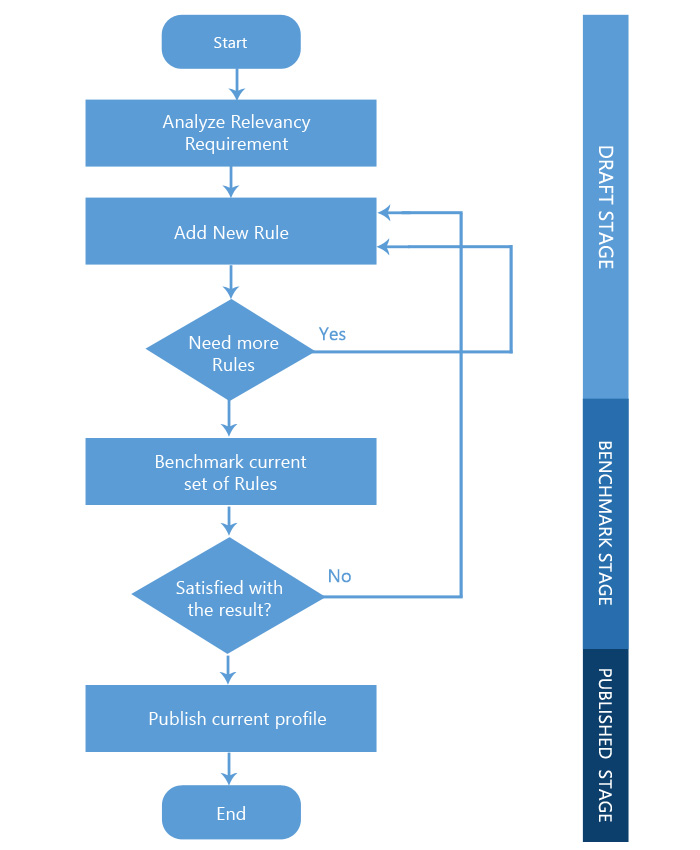

Benchmarking is the second part of the two-part workflow for achieving a mature search platform.

The purpose of Benchmarking is to test a Relevancy Model, which is a collection of rules. These rules are executed against a collection of test queries that the users of the system have carefully curated. The run yields a series of metrics and statistics, compiled into easy-to-understand graphs and tables, that help the user determine how that Relevancy Model performs against the System or against other locally created Test Suites.

Why do we need Benchmarking?

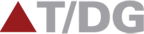

Benchmarking helps us analyze the Relevancy and Performance of a search engine and identify gaps and required improvements.

How do I Benchmark?

Benchmarking scope can be one of the following:

- System Level

- Test Suite Level

Before you benchmark the System, it must have at least one Test Suite, which is a collection of test search queries set up by the users. To create a Test Suite, you first need to understand the Test Suite Anatomy.

You can run the Benchmark either from the Test Suite Manager tab or the Model Analyzer tab.

- Running the Benchmark from the Test Suite Manager tab: Select the Benchmark option from the Tools menu. The scope of the Benchmark operation depends on what you have selected in the Test Suite panel on the left.

- Running the Benchmark from the Model Analyzer tab: First select the scope from the drop-down, then click the 'Run Benchmark' button. In the popup, provide the name of the Model against which you want to benchmark the queries.

Because benchmarking can be an operation-intensive task, only one Model can be benchmarked at a time. A message is shown when processing is done and the metrics have been calculated for the current Model.

How do I see the benchmarking statistics?

In the Model Analyzer screen, select the scope for which you want to see metrics. The drop-down contains a list of Test Suites; the default scope is “System”, which covers all the metrics from the public Test Suites. Changing the value in the drop-down updates the tables to reflect the data for the selected scope.

Note: The System or Test Suite must have been benchmarked at least once for data to be generated.

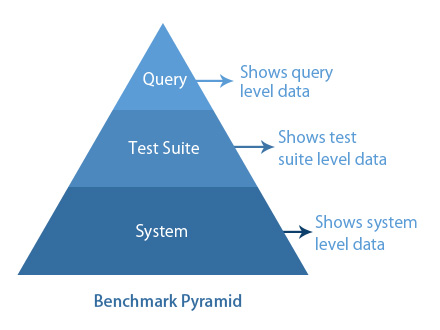

Benchmarking metrics can be seen for 3 different scopes as follows:

- System Scope

- Test Suite Scope

- Query Scope

1. System/Public Scope

Benchmarking at System scope executes all the queries present in the Public Test Suites against a selected Relevancy Model and calculates the current Relevancy and Performance metrics of those Test Suites. A System Benchmark can become quite an operation-intensive task, depending on the number of queries present in the Public Test Suites. The queries are evaluated based on user-provided ratings: the rated documents inside the Snapshot are treated as the expected results and are matched against the actual query results. Based on this analysis, certain Relevancy and Performance metrics are calculated.
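The matching of rated Snapshot documents against actual results can be illustrated with a small sketch. This page does not name the specific metrics the product calculates, so precision at k is used here purely as an assumed example of a Relevancy metric. The function name, the rating scale, and the document ids are all hypothetical.

```python
from typing import Dict, List


def precision_at_k(expected_ratings: Dict[str, int],
                   actual_results: List[str],
                   k: int = 10,
                   min_rating: int = 1) -> float:
    """Fraction of the top-k actual results that are rated relevant in the snapshot.

    Assumption: a document counts as relevant when its user rating is at
    least `min_rating`; unrated documents are treated as not relevant.
    """
    top_k = actual_results[:k]
    if not top_k:
        return 0.0
    relevant = sum(
        1 for doc_id in top_k
        if expected_ratings.get(doc_id, 0) >= min_rating
    )
    return relevant / len(top_k)


# Hypothetical snapshot: document id -> user-provided rating (0 = not relevant).
snapshot = {"doc-1": 3, "doc-2": 0, "doc-7": 2}

# Hypothetical ranked result list returned by the search engine for the same query.
results = ["doc-1", "doc-4", "doc-7", "doc-2"]

score = precision_at_k(snapshot, results, k=4)
print(score)  # 0.5: two of the four returned documents are rated relevant
```

A real benchmark would compute such a value per query and then aggregate it across the selected scope. How the product aggregates is not described here.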

2. Test Suite Scope

Benchmarking at Test Suite scope executes only the queries present in a given Test Suite against a selected Relevancy Model. It is therefore a lighter process than a System Benchmark.

3. Query Scope

Unlike System Level and Test Suite Level, a Query Level benchmark is not run explicitly. Whenever the System or a Test Suite is benchmarked, the queries present under that scope are executed.
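The relationship between the three scopes can be sketched as a query-selection step. This is a minimal illustration under stated assumptions, not the product's implementation: the `Suite` class, the `queries_for_scope` function, and the sample suites are hypothetical. It also assumes that a non-public Test Suite can still be benchmarked at Test Suite scope.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Suite:
    """Hypothetical stand-in for a Test Suite: a named collection of queries."""
    name: str
    public: bool
    queries: List[str] = field(default_factory=list)


def queries_for_scope(scope: str, suites: List[Suite]) -> List[str]:
    """Return the queries that a benchmark run at the given scope would execute."""
    if scope == "System":
        # System scope: every query from every public Test Suite.
        return [q for s in suites if s.public for q in s.queries]
    for s in suites:
        if s.name == scope:
            # Test Suite scope: only the queries of the selected suite.
            return list(s.queries)
    raise KeyError(f"unknown scope: {scope}")


suites = [
    Suite("Products", True, ["red shoes", "laptop bag"]),
    Suite("Drafts", False, ["wip query"]),
    Suite("Support", True, ["reset password"]),
]

system_queries = queries_for_scope("System", suites)
suite_queries = queries_for_scope("Drafts", suites)
print(system_queries)  # ['red shoes', 'laptop bag', 'reset password']
print(suite_queries)   # ['wip query']
```

There is no separate entry point for a single query: Query scope results exist only because the query was part of one of the two lists above.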

What is the detailed Benchmarking workflow?

The detailed benchmarking workflow is shown below: